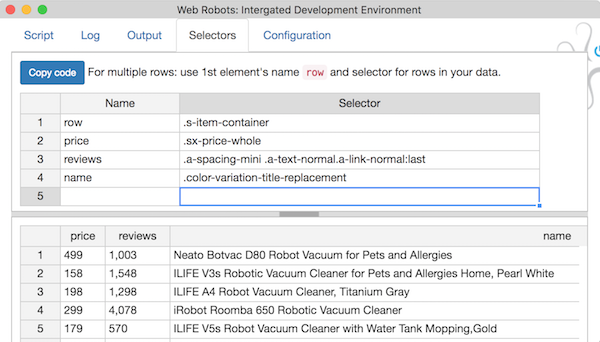

New IDE Extension Release

Today we are releasing an update to our main extension, Web Robots Scraper IDE. This release, version 2017.6.20, brings several improvements to the UI, to proxy settings control, and to the handling of hash symbols in URLs.

Version 2017.6.20 RELEASE NOTES

- UI: Robot run statistics are now displayed in the same place and no longer “jump” around.

- UI: When a robot finishes, its status is a direct link to the robot run list on the portal. The run link is a direct link to data preview and download on the portal.

- setProxy() functionality has been expanded. See documentation for details.

- Bugfix: fixed a bug where consecutive steps whose URLs are identical before the # symbol did not load correctly (example: a step at http://foobar.com#a followed by a step at http://foobar.com#b).

- Other internal engine improvements […]
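
To make the hash-symbol case from the bugfix above concrete: browsers treat a move between two URLs that differ only after the # as a navigation within the same document, so the page is not reloaded. The sketch below shows one way to detect such a pair of URLs. The helper name `differsOnlyByHash` is hypothetical and is not part of the Web Robots API; it only illustrates the condition and says nothing about how the extension handles it internally.

```typescript
// Hypothetical helper (not part of the Web Robots API): returns true when
// two URLs point at the same document and differ only in the part after '#'.
function differsOnlyByHash(from: string, to: string): boolean {
  const a = new URL(from);
  const b = new URL(to);

  // Remember the fragments, then strip them so the rest can be compared.
  const hashA = a.hash;
  const hashB = b.hash;
  a.hash = "";
  b.hash = "";

  return a.href === b.href && hashA !== hashB;
}

// The example from the release notes: same address, different fragment.
console.log(differsOnlyByHash("http://foobar.com#a", "http://foobar.com#b")); // true

// A different path is an ordinary navigation, not a hash-only change.
console.log(differsOnlyByHash("http://foobar.com#a", "http://foobar.com/other#b")); // false
```

A robot author could use a check like this to spot consecutive steps that fall into the hash-only case when debugging on an older version of the extension.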