We often spider through categories, combined with pagination or infinite scroll, when we need to discover and crawl all items of interest on a website. However, there is a simpler and more straightforward approach: using sitemaps. Sitemap-based robots are easier to maintain than a mix of category drilling, pagination and imitation of dynamic content loading.

After all, sitemaps are designed for robots to find all resources on a particular domain.

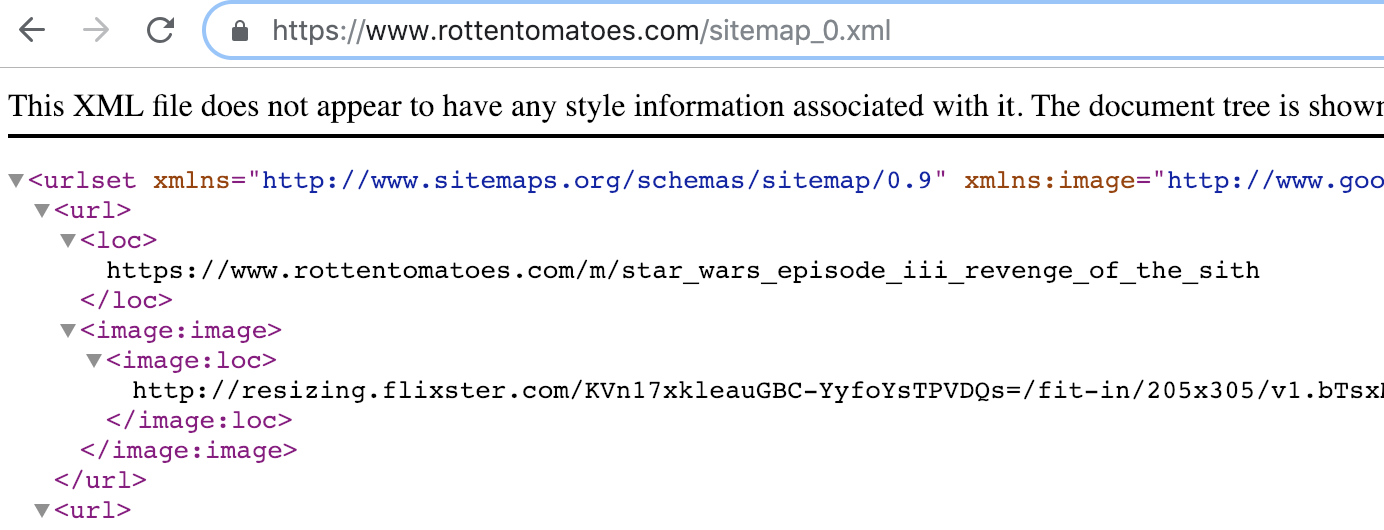

Example of a sitemap:

Finding Sitemaps

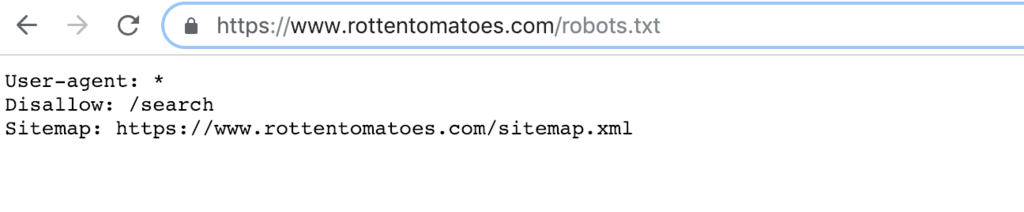

- The fastest way to find a sitemap URL is to check the robots.txt file, for example https://www.rottentomatoes.com/robots.txt. Sitemaps are declared there on lines starting with "Sitemap:".

- We can also probe typical sitemap URLs such as domain.com/sitemap or domain.com/sitemap.xml.

- Sometimes simply going to the homepage and searching for the keyword “sitemap” works.

- If none of the above bears fruit, a Google search can help (for example, “target.com sitemap”).
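The first two checks are easy to script. Below is a minimal sketch, assuming the robots.txt text has already been downloaded (for example with $.get). It collects URLs from "Sitemap:" lines and otherwise falls back to the typical locations listed above. The helper names (sitemapsFromRobotsTxt, candidateSitemapUrls, firstReachable) are hypothetical and not part of any framework.

```javascript
// Hypothetical helpers for the first two steps above; the robots.txt text
// is assumed to be fetched already (e.g. with $.get or fetch).

// Return every URL declared on a "Sitemap:" line (directive is case-insensitive).
function sitemapsFromRobotsTxt(robotsTxt) {
  const urls = [];
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, '').trim(); // drop trailing comments
    const match = /^sitemap\s*:\s*(\S+)/i.exec(line);
    if (match) urls.push(match[1]);
  }
  return urls;
}

// Prefer sitemaps declared in robots.txt; otherwise fall back to the
// typical locations domain.com/sitemap.xml and domain.com/sitemap.
function candidateSitemapUrls(origin, robotsTxt = '') {
  const declared = sitemapsFromRobotsTxt(robotsTxt);
  if (declared.length > 0) return declared;
  const base = origin.replace(/\/+$/, ''); // avoid "//sitemap.xml"
  return [`${base}/sitemap.xml`, `${base}/sitemap`];
}

// Return the first candidate answering with a 2xx status, or null.
// Needs a global fetch (Node 18+ or a browser).
async function firstReachable(urls) {
  for (const url of urls) {
    try {
      const res = await fetch(url);
      if (res.ok) return url;
    } catch (err) {
      // Network error: try the next candidate.
    }
  }
  return null;
}
```

In practice you would fetch the robots.txt text, pass it to candidateSitemapUrls together with the site origin, and hand the result to firstReachable. The Google search and homepage checks stay manual.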

Example of domain/robots.txt:

Working With Large Sitemaps

Sitemaps usually have many thousands of records, and opening one directly can freeze the Chrome browser for several minutes while it renders the XML. Our best practice is to fetch the sitemap with a $.get request and process the response in code.
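Very large sites often split their sitemap into several files, because the sitemaps.org protocol caps a single sitemap at 50,000 URLs and 50 MB uncompressed. The files are then listed in a sitemap index, whose root element is sitemapindex instead of urlset. The sketch below walks such an index without dependencies. It assumes uncompressed, well-formed XML without namespace prefixes or CDATA, since regexes are not a general XML parser. The function names and the injected fetchText callback are hypothetical.

```javascript
// Decode the five predefined XML entities (sitemaps escape "&" in URLs as &amp;).
function decodeXmlEntities(text) {
  return text
    .replace(/&lt;/g, '<')
    .replace(/&gt;/g, '>')
    .replace(/&quot;/g, '"')
    .replace(/&apos;/g, "'")
    .replace(/&amp;/g, '&'); // last, so "&amp;lt;" decodes to "&lt;"
}

// All <loc> values in document order, with surrounding whitespace trimmed.
function extractLocs(xml) {
  const locs = [];
  const re = /<loc>\s*([\s\S]*?)\s*<\/loc>/g;
  let m;
  while ((m = re.exec(xml)) !== null) locs.push(decodeXmlEntities(m[1]));
  return locs;
}

// In a sitemap index the <loc> entries are child sitemaps, not pages.
function classifySitemap(xml) {
  return { isIndex: /<sitemapindex[\s>]/.test(xml), locs: extractLocs(xml) };
}

// Follow an index down to page URLs. fetchText(url) must resolve to the
// response body as a string, e.g. (url) => fetch(url).then((r) => r.text()).
async function collectPageUrls(sitemapUrl, fetchText) {
  const { isIndex, locs } = classifySitemap(await fetchText(sitemapUrl));
  if (!isIndex) return locs;
  const pages = [];
  for (const child of locs) {
    // A loop instead of push(...array) avoids huge argument lists.
    for (const url of await collectPageUrls(child, fetchText)) pages.push(url);
  }
  return pages;
}
```

Injecting fetchText keeps the parsing testable offline. The same functions work with fetch in Node 18+ or with a $.get wrapper in the browser, provided the wrapper resolves to text rather than a parsed XML document.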

Example of getting a sitemap with an AJAX request and filtering its URLs:

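```javascript
// Fetch the sitemap as data instead of opening it in a tab,
// so Chrome never has to render the XML.
$.get('https://www.rottentomatoes.com/sitemap_0.xml').then(function (response) {
  // Each <url> entry holds the page address in its <loc> child.
  $('url loc', response).each(function (i, v) {
    var url = $(v).text().trim();

    // Filtering: we only need URLs that have no further path after the film
    // name, so we skip URLs with longer paths than a film page has.
    if (url.split('/').length < 6) {
      next(url, 'getFilmInfo'); // queue the URL for the 'getFilmInfo' step
    }
  });

  done(); // finish this step once every matching URL has been queued
});
```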
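The split('/').length < 6 check counts the scheme, the empty string between the two slashes and the host as the first three pieces. It therefore keeps URLs with at most two path segments, such as a film page at /m/film_name. Below is a sketch of the same idea using the standard URL class, so that a trailing slash or a slash inside a query string does not change the count. The /m/film_name shape and the helper names are assumptions for illustration.

```javascript
// Number of non-empty path segments, ignoring query string and fragment.
function pathDepth(url) {
  return new URL(url).pathname.split('/').filter(Boolean).length;
}

// Keep URLs no deeper than a film page (assumed: /m/<film_name>, depth 2).
// "<= maxDepth" mirrors the original "split('/').length < 6" test.
function shallowUrls(urls, maxDepth = 2) {
  return urls.filter((url) => pathDepth(url) <= maxDepth);
}
```

Unlike the string split, this version keeps .../m/film_name/ (with a trailing slash) and still drops deeper URLs such as .../m/film_name/reviews.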

Downsides of Sitemap Approach

- A sitemap can be outdated (old URLs leading to 404 pages), and the site owner might not even notice that their sitemaps are incorrect. It is necessary to spot-check that the URLs actually work.

- A sitemap might not list all the items shown in the normal website interface. Best practice is to spot-check that items found on the website are present in the sitemap as well.

- Sitemaps do not allow filtering items by criteria. For example, if we need only electronics from a large e-shop, we still have to crawl all products and do the filtering in the back end.

- Sitemaps do not show how popular an item is: for example, we cannot infer whether a particular item is on the first page of its category or somewhere near the end.
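The first two spot checks can be partly automated. Below is a minimal sketch with hypothetical helper names. sampleUrls draws a uniform random sample using a partial Fisher-Yates shuffle, with an injectable random source for repeatable runs. brokenUrls reports sampled URLs that do not answer with a 2xx status. missingFromSitemap lists URLs found while browsing the site that the sitemap lacks. URLs are compared as exact strings, so both lists are assumed to be normalized the same way beforehand (trailing slashes, http vs https).

```javascript
// Pick up to n distinct URLs uniformly at random; the input is not modified.
function sampleUrls(urls, n, random = Math.random) {
  const copy = urls.slice();
  const k = Math.min(n, copy.length);
  // Partial Fisher-Yates: after k swaps the first k slots are a uniform sample.
  for (let i = 0; i < k; i++) {
    const j = i + Math.floor(random() * (copy.length - i));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy.slice(0, k);
}

// URLs seen on the website that are absent from the sitemap.
function missingFromSitemap(siteUrls, sitemapUrls) {
  const inSitemap = new Set(sitemapUrls);
  return siteUrls.filter((url) => !inSitemap.has(url));
}

// Report sampled URLs that fail or return a non-2xx status (after redirects).
// Needs a global fetch (Node 18+ or a browser).
async function brokenUrls(urls) {
  const broken = [];
  for (const url of urls) {
    try {
      const res = await fetch(url);
      if (!res.ok) broken.push({ url, status: res.status });
    } catch (err) {
      broken.push({ url, status: null }); // network error
    }
  }
  return broken;
}
```

A small sample (a few dozen URLs) is usually enough to tell whether a sitemap is badly outdated before building a robot on it.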
